📚 Part_300: x86_64 Memory

Introduction

In this post, we will briefly explain memory management in x86_64 CPUs. At least a basic understanding of it is necessary for setting up the VMCS (Virtual Machine Control Structure) of our minimal hypervisor.

Understanding how memory management works becomes critical when we begin setting up the VMCS for virtualization. The VMCS contains a set of control fields that configure how the virtual CPU behaves under the hypervisor, including how the guest manages memory and how the host controls memory access.

The guest flags in the VMCS specify the memory management setup for the guest VM. For example, flags in the VMCS can dictate whether the guest operates in protected or long mode and how it handles paging. The host flags, on the other hand, describe the host side of the transitions between guest and host, such as the processor state (including control registers and segment registers) that is loaded when control returns from the guest to the host.

As we set up the VMCS in our minimal hypervisor, we'll need to ensure that the guest and host flags are correctly configured to allow proper memory management, virtualization, and safe execution of guest code.

Why the CPU Uses Paging

In this section, we will explore paging, why the kernel relies on it, and the advantages it offers. We'll focus specifically on the x86 architecture. The need for paging arises from a fundamental requirement of operating systems: memory isolation. The memory of one process should never interfere with another. For instance, the text you type in your email client shouldn't be overwritten or corrupted by activity in your web browser.

Segmentation

Memory segmentation was introduced in 1978 with the Intel 8086, initially to expand addressable memory. By 1982, with the 80286, segmentation was also used for memory isolation. However, segmentation gradually fell out of favor until it was largely abandoned in 2003 with the 64-bit x86_64 architecture (except in a few special cases) and replaced by a more robust solution: paging, which had been introduced on x86 in 1985 with the 80386.

We won't go deep into segmentation in this section. Still, to understand why it was eventually phased out, we must first introduce two key ideas: virtual memory and memory fragmentation.

The idea behind virtual memory is to abstract physical memory addresses away from software. Instead of accessing physical memory directly, the CPU uses virtual addresses, which are translated into physical addresses by the Memory Management Unit (MMU).

In the segmentation approach, this translation involves a segment selector pointing to a segment descriptor that contains a base address, and the linear address is calculated by adding the offset used by the program to that base. For example, if you access the virtual address 0x1111110 in a segment whose base is 0x1000000, the linear (or, without paging, physical) address you access on the hardware is 0x2111110.
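To make the base-plus-offset arithmetic concrete, here is a minimal Python sketch. `segment_translate` is a hypothetical helper and the limit value is an assumption; on real hardware an access beyond the segment limit raises a general-protection fault rather than a Python exception.

```python
def segment_translate(base: int, offset: int, limit: int) -> int:
    """Return the linear address base + offset for an access inside a segment.

    Hypothetical helper: real x86 hardware raises a general-protection
    fault (#GP) when the offset exceeds the segment limit; here we raise
    a Python exception instead.
    """
    if offset > limit:
        raise ValueError(f"offset {offset:#x} exceeds segment limit {limit:#x}")
    return base + offset


# The example from the text: offset 0x1111110 in a segment based at 0x1000000.
linear = segment_translate(base=0x1000000, offset=0x1111110, limit=0xFFFFFFF)
print(hex(linear))  # 0x2111110
```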

On modern systems, segmentation is largely unused, but we will see in the next post that the CPU segment registers still have to be configured in the VMCS, so segmentation needs to be understood correctly to set the guest and host flags.

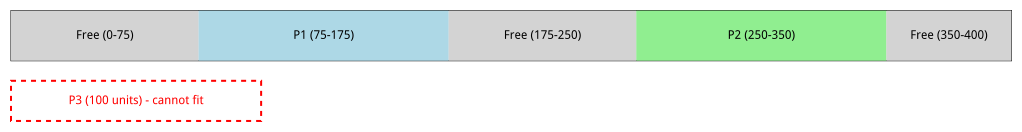

Segmentation offers a flexible way to place programs in memory. Each program can start at an arbitrary physical address defined by its segment base. However, this flexibility introduces a serious problem. Imagine we have 400 physical memory units and want to run four programs, each requiring 100 units. The first program is placed at addresses 75–175 and the second at 250–350. Now, we want to load the third program, which also needs 100 memory units. Despite having 200 units of free memory (from 0–75, 175–250, and 350–400), no contiguous block of 100 units is available. As a result, the third program cannot be loaded unless we stop execution and move other programs around to defragment the memory.

This problem is called external fragmentation: enough memory is free in total, but it is broken into smaller chunks that are individually too small to be useful.
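The arithmetic in this example can be checked with a short sketch that lists the holes left between the two loaded programs. `free_blocks` is a hypothetical helper that treats each program as a half-open `[start, end)` range of memory units.

```python
def free_blocks(total: int, used: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Return the free [start, end) ranges left between used [start, end) ranges."""
    free, cursor = [], 0
    for start, end in sorted(used):
        if start > cursor:
            free.append((cursor, start))   # hole before this program
        cursor = max(cursor, end)
    if cursor < total:
        free.append((cursor, total))       # hole after the last program
    return free


used = [(75, 175), (250, 350)]             # programs 1 and 2 from the example
holes = free_blocks(400, used)
total_free = sum(end - start for start, end in holes)
largest = max(end - start for start, end in holes)
print(holes)               # [(0, 75), (175, 250), (350, 400)]
print(total_free, largest) # 200 75
print(largest >= 100)      # False: program 3 does not fit contiguously
```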

Paging

Paging was introduced to solve the problem of fragmentation caused by segmentation. In paging, virtual and physical memory are divided into fixed-size blocks called pages in virtual memory and page frames in physical memory.

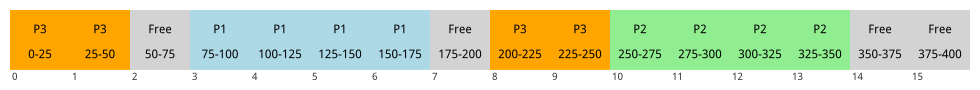

Let's return to our earlier example. Suppose we have 400 units of physical memory and choose a page size of 25, giving us 16 physical pages (numbered 0 to 15). Each program requires 100 units of memory, which translates to 4 pages. Now, let's say the first program is placed at addresses 75 to 175, covering pages 3 to 6, and the second program is placed at addresses 250 to 350, occupying pages 10 to 13. The remaining free pages are scattered: 0 to 2, 7 to 9, and 14 to 15.

With segmentation, a third program requiring 100 units could not find a single contiguous memory block large enough to fit. With paging, that contiguity isn't necessary. The third program can be loaded into any four available pages, perhaps pages 0 and 1 (covering 0 to 50) and pages 8 and 9 (covering 200 to 250), and the fourth can use the same mechanism. The memory management unit handles all the mapping between virtual pages and physical page frames, allowing the program to run as though its memory is contiguous, even though it's spread across fragmented areas.
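A minimal sketch of the same scenario, assuming a toy allocator (hypothetical `load_program` and `translate` helpers) that hands out the lowest-numbered free page frames first. Its choice of frames therefore differs from the one in the text, but any four free frames would work, because each program's page table hides where its frames actually are.

```python
PAGE_SIZE = 25
NUM_FRAMES = 400 // PAGE_SIZE                     # 16 physical page frames

# Frames occupied by programs 1 and 2 (addresses 75-175 and 250-350).
used_frames = set(range(3, 7)) | set(range(10, 14))
free_frames = [f for f in range(NUM_FRAMES) if f not in used_frames]


def load_program(size: int) -> dict[int, int]:
    """Map each virtual page of a program to any free physical frame.

    Returns a tiny per-program page table {virtual page: physical frame}.
    The frames do not need to be contiguous.
    """
    pages_needed = -(-size // PAGE_SIZE)          # ceiling division
    if pages_needed > len(free_frames):
        raise MemoryError("not enough free frames")
    return {vpage: free_frames.pop(0) for vpage in range(pages_needed)}


def translate(page_table: dict[int, int], vaddr: int) -> int:
    """Translate a program's virtual address into a physical address."""
    vpage, offset = divmod(vaddr, PAGE_SIZE)
    return page_table[vpage] * PAGE_SIZE + offset


program3 = load_program(100)
program4 = load_program(100)
print(program3)                 # {0: 0, 1: 1, 2: 2, 3: 7}
print(program4)                 # {0: 8, 1: 9, 2: 14, 3: 15}
print(translate(program3, 90))  # page 3, offset 15 -> 7 * 25 + 15 = 190
```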

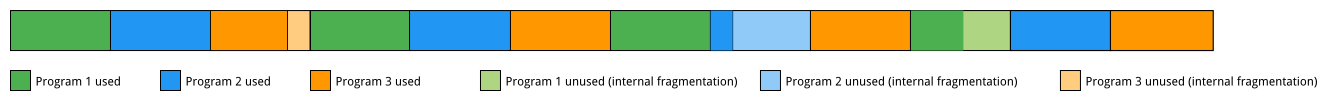

Paging eliminates external fragmentation, but it doesn't prevent all memory waste. A different issue can still occur, known as internal fragmentation. It happens because most programs do not require memory in exact multiples of the page size. For example, suppose each program in our scenario requires 80 memory units with a page size of 25 units, which translates to 3.2 pages. Since memory is allocated in whole pages, the program will be assigned 4 pages (100 units), even though it only needs 80. This results in 20 units of unused memory per program, sitting at the end of its last allocated page. This leftover space is considered lost, even though it's technically allocated.

This phenomenon is called internal fragmentation, and unlike external fragmentation, it is predictable: the waste per allocation is always less than one page. Assuming request sizes are spread uniformly relative to page boundaries, the average unused portion per allocation is about half the page size. This tradeoff is usually acceptable, given the benefits paging brings in terms of simplicity, performance, and protection.
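The waste is easy to compute: round the request up to whole pages and subtract the request size. The sketch below uses a hypothetical `internal_waste` helper. With integer request sizes spread evenly across page boundaries, the average works out to (page size - 1) / 2, which is just under half a page.

```python
def internal_waste(size: int, page_size: int) -> int:
    """Units allocated but unused when `size` is rounded up to whole pages."""
    pages = (size + page_size - 1) // page_size   # integer ceiling division
    return pages * page_size - size


print(internal_waste(80, 25))   # 4 pages = 100 units allocated -> 20 wasted

# Average waste over request sizes 1..100 with 25-unit pages.
sizes = range(1, 101)
average = sum(internal_waste(s, 25) for s in sizes) / len(sizes)
print(average)                  # 12.0, i.e. (25 - 1) / 2
```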

Page Tables

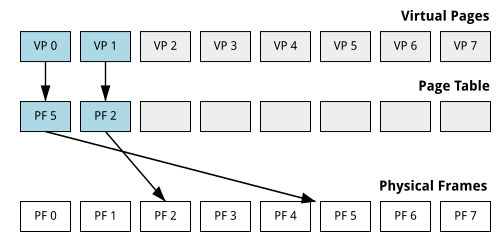

The paging mechanism in modern systems is designed to map virtual addresses to physical memory addresses efficiently, and a page table manages that translation. The CR3 register in the CPU holds the physical base address of the current (top-level) page table, which the processor uses as the starting point for every address translation. One of the simplest paging systems is the one-level page table, where each entry directly maps a virtual page to a physical frame. However, this system is inefficient: it needs a table entry for every possible virtual page, so in a system with a large virtual address space the entire page table must be allocated even if only a small part of the address space is in use, which wastes memory.
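To see how large a one-level table would be, assume x86_64-like parameters: a 48-bit virtual address space, 4 KB pages and 8-byte entries (the same sizes x86_64 uses for its page tables). A back-of-the-envelope calculation in Python:

```python
VA_BITS = 48       # virtual address width on x86_64 with 4-level paging
PAGE_SHIFT = 12    # 4 KB pages
ENTRY_SIZE = 8     # bytes per page-table entry

entries = 1 << (VA_BITS - PAGE_SHIFT)   # one entry per virtual page: 2**36
table_bytes = entries * ENTRY_SIZE      # 2**39 bytes for a single flat table
print(entries, table_bytes // 2**30, "GB")  # 68719476736 512 GB
```

A 512 GB table per address space is clearly impractical, which is what motivates splitting the table into levels.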

To overcome this limitation, modern x86_64 systems use multi-level paging, which reduces memory overhead and allows more flexible mapping between virtual and physical memory. Because the translation is split across multiple levels, page tables only need to be allocated for the memory regions that are actually in use.
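The saving can be sketched by counting the tables a 4-level hierarchy actually needs. The hypothetical `tables_needed` function below assumes 4 KB pages only and x86_64's split of the address into 9-bit indices (bits 47-39, 38-30 and 29-21 select the PML4, PDPT and PD entries). Mapping 2 MB of contiguous memory then needs only four 4 KB tables, i.e. 16 KB.

```python
def tables_needed(vaddrs) -> int:
    """Count the 4 KB page-table pages needed to map the given virtual addresses.

    One PML4, plus one PDPT per distinct PML4 index, one PD per distinct
    (PML4, PDPT) pair, and one PT per distinct (PML4, PDPT, PD) triple.
    Assumes 4 KB pages only (no large pages).
    """
    pdpts, pds, pts = set(), set(), set()
    for va in vaddrs:
        pdpts.add(va >> 39)   # identifies the PML4 entry
        pds.add(va >> 30)     # identifies the (PML4, PDPT) entry pair
        pts.add(va >> 21)     # identifies the (PML4, PDPT, PD) entry triple
    return 1 + len(pdpts) + len(pds) + len(pts)


two_mb = range(0, 2 * 2**20, 4096)           # 512 contiguous 4 KB pages
print(tables_needed(two_mb) * 4096)          # 16384 bytes of page tables
print(tables_needed([0, 0x7FFFFFFFF000]))    # two far-apart pages: 7 tables
```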

x86_64 4-Level Table

In the x86_64 architecture, the 4-level page table system consists of four levels: PML4, PDPT, PD, and PT. Each table contains 512 entries of 8 bytes each, so a table occupies exactly one 4 KB page. Each level divides the region covered by its parent by 512: an entry in the top-most level, PML4, covers a 512 GB region of virtual memory, a PDPT entry covers 1 GB, a PD entry covers 2 MB, and a PT entry maps a single 4 KB page.
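The per-entry coverage follows from repeatedly multiplying by 512, starting from a 4 KB page. A short sketch, assuming 4 KB pages throughout (large pages are ignored):

```python
PAGE_SIZE = 4096
ENTRIES_PER_TABLE = 512   # a 4 KB table holds 512 entries of 8 bytes

# Bytes covered by one entry at each level, from the bottom up.
coverage = {}
size = PAGE_SIZE
for level in ("PT", "PD", "PDPT", "PML4"):
    coverage[level] = size
    size *= ENTRIES_PER_TABLE   # the next level up covers 512 times more

# PT: 4 KB, PD: 2 MB, PDPT: 1 GB, PML4: 512 GB
for level, nbytes in coverage.items():
    print(f"{level:5} entry covers {nbytes:,} bytes")
```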

The virtual address is 48 bits long. During translation, it is divided into five parts: four 9-bit indices, one for each page table level, and a final 12-bit offset within the page (4 × 9 + 12 = 48 bits).

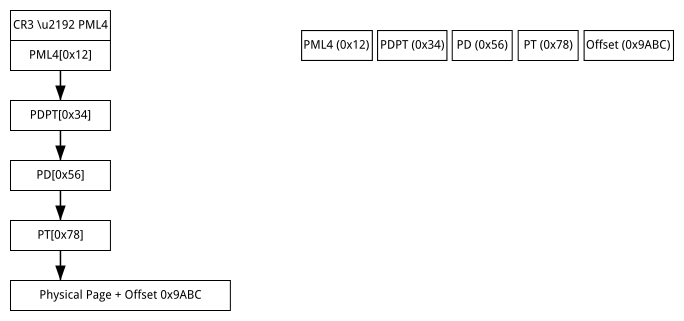

For example, consider the virtual address 0x123456789ABC. The translation process starts by breaking the address into parts: the PML4 index, PDPT index, PD index, PT index, and the page offset. Each of these indices points to the corresponding entry in the respective page table level. The final 12 bits of the address are the offset, which points to the exact location within the physical page after the translation through the page tables.
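The split can be sketched with shifts and masks. `split_virtual_address` is a hypothetical helper; it assumes the standard 4-level layout of 9-bit indices and a 12-bit offset, and ignores bits 63-48 (which must be copies of bit 47 in a canonical address).

```python
def split_virtual_address(va: int) -> tuple[int, int, int, int, int]:
    """Split an x86_64 virtual address into its 4-level paging fields.

    Bits 47-39: PML4 index, 38-30: PDPT index, 29-21: PD index,
    20-12: PT index, 11-0: byte offset within the 4 KB page.
    """
    return (
        (va >> 39) & 0x1FF,
        (va >> 30) & 0x1FF,
        (va >> 21) & 0x1FF,
        (va >> 12) & 0x1FF,
        va & 0xFFF,
    )


fields = split_virtual_address(0x123456789ABC)
print([hex(f) for f in fields])   # ['0x24', '0xd1', '0xb3', '0x189', '0xabc']
```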

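The translation process is as follows. For 0x123456789ABC, bits 47-39 give the PML4 index 0x24, bits 38-30 the PDPT index 0xD1, bits 29-21 the PD index 0xB3, bits 20-12 the PT index 0x189, and bits 11-0 the offset 0xABC:

- Read the CR3 register to get the physical address of the PML4 table.

- Read entry PML4[0x24] to get the address of the PDPT.

- Read entry PDPT[0xD1] to get the address of the PD.

- Read entry PD[0xB3] to get the address of the PT.

- Read entry PT[0x189] to get the physical address of the 4 KB page frame.

- Compute the final physical address by adding the offset 0xABC to the page frame's physical address.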
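The walk itself can be simulated in a few lines. This is a sketch under simplifying assumptions: physical memory is modeled as a hypothetical dictionary of tables, each entry only uses the present bit (bit 0) and the address bits 51-12, large pages are not supported, and all physical addresses are made up for the example.

```python
PRESENT = 0x1
ADDR_MASK = 0x000F_FFFF_FFFF_F000   # bits 51-12: next table or page frame address

# Hypothetical physical memory: {physical table address: {index: entry}}.
phys_tables = {}


def walk(cr3: int, va: int) -> int:
    """Translate `va` by walking PML4 -> PDPT -> PD -> PT (4 KB pages only)."""
    table = cr3 & ADDR_MASK                 # physical address of the PML4
    for shift in (39, 30, 21, 12):          # PML4, PDPT, PD, PT index positions
        index = (va >> shift) & 0x1FF
        entry = phys_tables.get(table, {}).get(index, 0)
        if not entry & PRESENT:
            raise LookupError(f"page fault: entry not present (shift {shift})")
        table = entry & ADDR_MASK           # next table, or the frame after the PT
    return table + (va & 0xFFF)             # frame base + page offset


# Build one mapping for 0x123456789ABC using made-up physical addresses.
cr3 = 0x1000
phys_tables[0x1000] = {0x24: 0x2000 | PRESENT}     # PML4[0x24]  -> PDPT
phys_tables[0x2000] = {0xD1: 0x3000 | PRESENT}     # PDPT[0xD1]  -> PD
phys_tables[0x3000] = {0xB3: 0x4000 | PRESENT}     # PD[0xB3]    -> PT
phys_tables[0x4000] = {0x189: 0x7F000 | PRESENT}   # PT[0x189]   -> page frame
print(hex(walk(cr3, 0x123456789ABC)))              # 0x7fabc
```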

The primary advantage of this multi-level paging system is its ability to reduce memory consumption for page tables. Unlike a single-level page table that would require entries for every virtual page, the multi-level approach ensures that only the required portions of the address space have page table entries. Additionally, each level breaks down the translation process into smaller chunks, making the overall system more efficient regarding memory allocation.

Modern processors also use the Translation Lookaside Buffer (TLB). This small, fast cache stores recently used virtual-to-physical address translations to speed up memory access by reducing the need to walk through the page tables every time a memory address is accessed.
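A toy model of the TLB helps show why it pays off. `TinyTLB` is hypothetical: real TLBs are hardware structures with their own sizes, associativity and replacement policies, and here we simply assume a small fully associative cache with least-recently-used eviction in front of a slow page walk. Its `flush` method loosely mirrors how reloading CR3 invalidates non-global translations on x86 when PCIDs are not in use.

```python
from collections import OrderedDict


class TinyTLB:
    """Toy fully associative TLB with LRU replacement (hypothetical model).

    Caches virtual page number -> physical frame base, so repeated accesses
    to the same 4 KB page skip the page-table walk.
    """

    def __init__(self, capacity: int, walk):
        self.capacity = capacity
        self.walk = walk              # callable: virtual page base -> frame base
        self.entries = OrderedDict()  # vpn -> frame base, least recently used first
        self.hits = 0
        self.misses = 0

    def translate(self, va: int) -> int:
        vpn, offset = va >> 12, va & 0xFFF
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)         # mark as most recently used
            frame = self.entries[vpn]
        else:
            self.misses += 1
            frame = self.walk(vpn << 12)          # slow path: walk the page tables
            self.entries[vpn] = frame
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict least recently used
        return frame + offset

    def flush(self) -> None:
        """Drop every cached translation, e.g. after the page tables change."""
        self.entries.clear()


# Usage with a made-up walk that maps every page 1 MB higher.
tlb = TinyTLB(capacity=2, walk=lambda page: page + 0x100000)
tlb.translate(0x1234)         # miss: walks and caches page 0x1000
tlb.translate(0x1FFF)         # hit: same 4 KB page
tlb.translate(0x5000)         # miss
print(tlb.hits, tlb.misses)   # 1 2
```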

Conclusion

In this post, we've explored the essential concepts of memory management in x86_64 CPUs, which are crucial for understanding the configuration of the Virtual Machine Control Structure (VMCS) in a minimal hypervisor. We've covered the evolution of memory management techniques, from segmentation to paging, and explained how paging resolves issues like external fragmentation.

While paging eliminates external fragmentation, it introduces the potential for internal fragmentation, which is predictable but unavoidable.

We also discussed how modern x86_64 systems use multi-level page tables to manage memory efficiently, reducing the overhead of allocating unnecessary entries for unused virtual address spaces. With its hierarchical structure, the multi-level paging system enables efficient memory translation with less memory consumption compared to a single-level page table approach. Moreover, the Translation Lookaside Buffer (TLB) further enhances the system's performance by caching recently used translations.